Consistency is the missing piece of my image gen puzzle

I have spent a lot of time recently experimenting with local AI image generation.

At this point, image generation has become very good, capable of creating beautiful detailed images.

However, if you want to use the images for something where you need to create consistent characters and styles, such as games or comics, you face a problem.

Simply using the same description of your character will result in big variations between generations.

I am generating the following images in ComfyUI. The model is Flux Dev.

Prompt: Cartoon of a man with brown hair and green eyes and a short beard. It is a fantasy medieval setting and the man is wearing a brown cape and leather armor underneath. In the background are rolling green hills.

Prompt: Cartoon of a man with brown hair and green eyes and a short beard. It is a fantasy medieval setting and the man is wearing a brown cape and leather armor underneath. He is holding a sword and fighting a dragon.

Neither the art style nor the character are the same in these two generations, even though the input is very similar.

So how can we solve this? Well the internet has no shortage of possible solutions.

Just use LoRA!

This is by far the most common answer.

However, to make a LoRA, you need data. This is fine if you want a character that already has lots of existing images.

In this case, I want to make more images of my original character.

And as shown above, getting 20+ consistent images of a character just using prompting is near impossible.

Just use img2img!

This is actually the opposite of what we want. img2img is good at tweaking details or styles. But what we want is the same details and style but different composition.

Just use IP-Adapter/Subject Adapter/Style Adapter!

First of all, adapting the style of the image helps, but not much.

While it does solve the problem of inconsistent styles, the details of the characters face, clothing etc. will still be different.

It is possible to use USO with Flux which provides a "subject adapter". This works well for some usecases, but falls apart when changing the composition.

Let's try to make our guy face away from the viewer.

New prompt without adaption: Cartoon of a man with brown hair and green eyes and a short beard. It is a fantasy medieval setting and the man is wearing a brown cape and leather armor underneath. He is standing with his back to the viewer and his arms raised. In the background is a town with a cobbelstone street.

Same prompt but with the original image as input. The details are faithful to the original, but the model wants to draw him from the front because that was the original composition.

Just use Image Edit!

I find that this actually works quite well. Image edit is very powerful, and can draw a character in completely different positions and settings while keeping details.

Let's try to edit the image using Qwen.

The original image.

Qwen image edit prompt: Make the man face away from the viewer with raised hands curled into fists. In the background is a town with a cobbelstone street.

The problem with using Image edit is that you are limited to the capabilites of the edit model.

This means that if you are using a somewhat specialized model, you might lose the style when makings significant edits.

In the following example, I generated an image in a sort of watercolor style and then edit it using Qwen.

New generation with a distinct style.

Qwen image edit: The man is standing on top of a dragon thrusting a sword into the dragon. In the background is a battlefield with abandoned weapons and fires. Watercolor style.

He is standing on top of the dragon alright, but he is definitely not thrusting his sword into it.

This looks pretty good, but if you look closely, it is not the exact same style.

Let's try another distint style

Image generated in 90s anime style.

Qwen image edit: The woman is wearing a business suit with a black jacket. She is smiling broadly with eyes half closed. In the background is an office. 90s anime style.

Qwen stays faithful to the original style.

This approach generally works great when using a style that both models know about.

Conclusion

I find that for creating consistent characters, clothing etc. edit models work best.

Or perhaps there is some combination of methods that I don't know about yet. Just generating high-quality one-off images can get complicated if you want something specific.

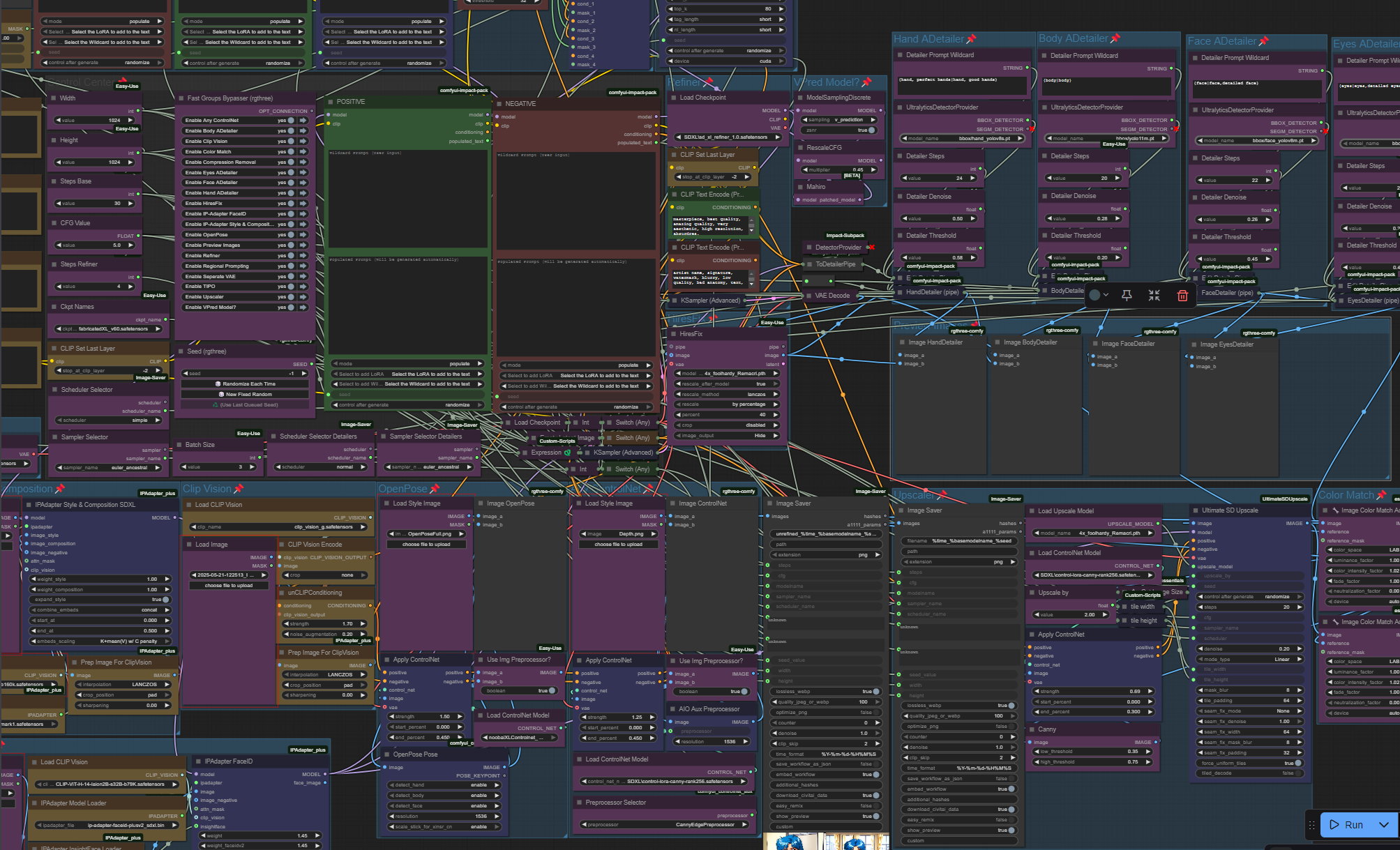

Pictured: the workflow I used to generate the anime-style image.

Anyway, I am excited to see where this technology is going. If the improvements keep coming at the current pace, these problems might be history in just a few years.